Data Upload

The Data Upload tool gives users a way to bring their own data onto the platform. Each time series you wish to use with the platform must be uploaded using this tool. Once uploaded, the data can be used all across Chronos, for example in a forecast or as the base series in a new scenario.

Before Uploading

Preparing Data

Forecasting algorithms require data. There are various ways of organizing time series data, but Chronos requires a formatted 'date' column and then other columns containing the series values.

Before uploading the data, it is advisable to scan it manually for errors. Sometimes when data is converted to new formats, values are truncated or corrupted. Checking a few summary figures, particularly the min, mean, max, and count of the data, is usually sufficient to detect any major problems. Review the data again after upload.
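The check described above can be sketched in a few lines of Python. This is only an illustration that runs outside of Chronos; the function name `summarize_column` and the sample data are hypothetical, and the file is assumed to follow the 'date' plus values layout.

```python
import csv
import io
import statistics

def summarize_column(csv_text, column):
    """Return count, min, mean, and max for one numeric column, skipping blank cells."""
    reader = csv.DictReader(io.StringIO(csv_text))
    values = [float(row[column]) for row in reader if (row[column] or "").strip()]
    return {
        "count": len(values),
        "min": min(values),
        "mean": statistics.fmean(values),
        "max": max(values),
    }

# Hypothetical sample in the expected layout: a 'date' column plus one values column.
sample = """date,values
2022-01-01,42.1
2022-01-02,40.8
2022-01-03,38.6
2022-01-04,42.8
"""

summary = summarize_column(sample, "values")
print(summary)
```

A count that is lower than expected, or a min or max far outside the plausible range, is a signal to inspect the file before uploading it.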

Add metadata to uploads when possible. Metadata is useful for remembering what a series was when it is reviewed months later. It is also important to be aware of as much context as possible about the series. Where do these numbers come from?

Two of the harder-to-detect data problems are incomplete recent data and data definition changes. Incomplete recent data is common where data has to be reviewed or arrives gradually as requests are processed. Incompleteness can also depend on when a forecast is performed. Performing a monthly forecast mid-month will often draw in incomplete data for the current month, which appears as a drastic and unexpected drop. The solution is usually to adjust the date range to remove the most recent data, or to manually adjust the recent values to match expectations.
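As a rough sketch of the date range adjustment, the snippet below drops everything from the month in which the forecast is run. The helper `drop_current_month` and the sample values are hypothetical, and monthly rows are assumed to be keyed by the first day of the month.

```python
from datetime import date

def drop_current_month(rows, as_of):
    """Keep only rows dated before the first day of the month containing as_of."""
    cutoff = as_of.replace(day=1)
    return [(d, v) for d, v in rows if d < cutoff]

# Hypothetical monthly totals, keyed by the first day of each month.
rows = [
    (date(2022, 2, 1), 40.8),
    (date(2022, 3, 1), 38.6),
    (date(2022, 4, 1), 12.3),  # partial month: looks like a sharp drop
]

# Forecast run on April 15th, so April is still incomplete and is removed.
trimmed = drop_current_month(rows, as_of=date(2022, 4, 15))
print(trimmed)
```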

Data definition changes are another frequent challenge. Business units get combined or separated, and the resulting data is suddenly much larger or smaller than before. Again, there is no automatic approach, and the solution is usually to adjust the date range to drop the affected data, or to manually adjust the data if possible.

Data format

We currently support .csv and .xlsx file uploads, and this is the primary way for users to bring their own data onto the platform. This is usually the first stop a user makes before starting to use the rest of the platform.

Formatting your data before bringing it onto the platform is essential. We've done as much as we can to accept many different formats and variations of data, but this step is nonetheless important. Tools like Excel or Google Sheets are good places to work with your time series data to ensure the formatting is correct. These tools often have utilities to transform data all at once, so the process should be quick and easy.

Data should look like the tables below. A column named "date" must be present; the "values" column, however, can be named anything.

Daily data

| date | values |
|---|---|
| 2022-01-01 | 42.1 |
| 2022-01-02 | 40.8 |
| 2022-01-03 | 38.6 |
| 2022-01-04 | 42.8 |
| ... | ... |

Monthly data

| date | values |
|---|---|
| 2022-01 | 42.1 |
| 2022-02 | 40.8 |
| 2022-03 | 38.6 |
| 2022-04 | 42.8 |
| ... | ... |

Yearly data

| date | values |
|---|---|
| 2018 | 42.1 |
| 2019 | 40.8 |
| 2020 | 38.6 |
| 2021 | 42.8 |
| ... | ... |

In some cases you'll want to bring in more than one series at a time, for example with Batch forecasting. To specify additional series, add the values as a new column while maintaining a single date column. If the series' date ranges do not line up, that is okay. Include all the dates needed; cells where a series doesn't have values can be left blank.

An example of this could look like the following:

Daily data

| date | series_one | series_two |
|---|---|---|
| 2022-01-01 | 42.1 | |
| 2022-01-02 | 40.8 | |
| 2022-01-03 | 38.6 | 115.2 |
| 2022-01-04 | 42.8 | 110.0 |
| 2022-01-05 | | 110.0 |
| 2022-01-06 | | 110.0 |
| ... | ... | ... |

The table above shows multiple series with differing date ranges. series_one spans from 2022-01-01 to 2022-01-04 and series_two spans from 2022-01-03 to 2022-01-06. Chronos will handle these cases without a problem as long as the date frequency of all the series being uploaded is the same. For example, mixing monthly data and daily data will not work.
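One way to produce such a file is sketched below: two series with different date ranges are combined into a single CSV with one date column and blank cells where a series has no value. The function `to_wide_csv` is a hypothetical helper and not part of Chronos; dates are assumed to be ISO-formatted strings so that sorting them as text gives chronological order.

```python
import csv
import io

def to_wide_csv(series_by_name):
    """Combine {name: {date: value}} series into one CSV with a single date column."""
    names = list(series_by_name)
    # Union of all dates; ISO date strings sort chronologically as plain text.
    all_dates = sorted({d for s in series_by_name.values() for d in s})
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["date", *names])
    for d in all_dates:
        # A missing value becomes an empty cell.
        writer.writerow([d, *(series_by_name[n].get(d, "") for n in names)])
    return buffer.getvalue()

series_one = {"2022-01-01": 42.1, "2022-01-02": 40.8,
              "2022-01-03": 38.6, "2022-01-04": 42.8}
series_two = {"2022-01-03": 115.2, "2022-01-04": 110.0,
              "2022-01-05": 110.0, "2022-01-06": 110.0}

wide = to_wide_csv({"series_one": series_one, "series_two": series_two})
print(wide)
```

Both series here are daily. The sketch does not check that the frequencies match, so that remains something to verify before uploading.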

Preprocessing

Once you've successfully uploaded some data through the file upload process, there are a few preprocessing options available before the final submission of the data.

Transformations

- Remove outliers: In addition to slicing the data as discussed above, other changes can be performed on the data after upload. Outlier removal is straightforward. Particularly large or small data points are removed if they don't appear to follow the pattern of other data points. Sometimes automatic outlier removal can be overly aggressive, but generally outlier removal is a safe bet in cases where predicting the average values (rather than predicting extremes) is the goal.

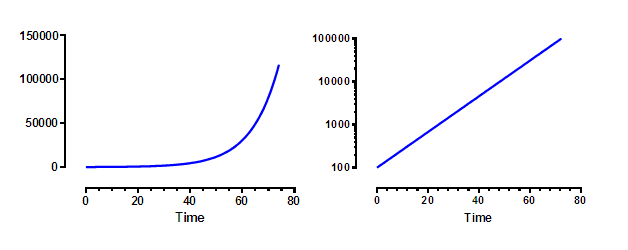

- Convert to log scale: Taking the log can shift the data into a more linear, more stable series. Log scales are commonly used for graphing in scientific publications. Below are two graphs, both showing the same time series; the y-axis on the left is a linear scale, while the y-axis on the right is a log scale.

- Smoothing: Think of smoothing as a more refined take on outlier removal. It involves reframing each data point as an average of that point and the previous points. No data points are dropped; instead, changes are diluted more gradually across time. Generally a little bit of smoothing, like 7-step smoothing for daily data, is beneficial for models, essentially preventing the model from overthinking small fluctuations. For short-term forecasting and where forecasts are viewed at a highly granular level, with each day examined closely, smoothing is more often avoided. The longer the horizon and the more high-level the use of the forecast, the more beneficial smoothing will be. The chart below shows some example daily data with 7-day, 30-day, and 90-day smoothing applied. The greater the smoothing period, the smoother the data.

- Resampling: A different approach than smoothing, but with a similar goal of turning data that is very random into something more stable. Data is aggregated to a lower frequency; for example, daily data is combined into monthly data. The advantage of this approach is essentially the same idea as the central limit theorem. How well some day, say June 21, does on some value may vary greatly depending on the weather, events, construction, and so on, but across the entire month of June the good and bad days combine into a more stable value. Resampling is also useful for very high frequency data, like hourly data. Higher frequency data is often slower to forecast as well as potentially less accurate, so aggregating to the level at which decisions are made is most practical. Resampling should be avoided when it drastically shortens the training history. Three years of daily history is ample data for most models, but the same data resampled to an annual cadence yields only three data points, which is too little for most models. The chart below shows the same data, this time resampled from daily to monthly to yearly.
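To make the smoothing and resampling ideas concrete, here is a minimal Python sketch. It is not the Chronos implementation. It assumes smoothing is a trailing mean over the current and preceding points, and that resampling takes the mean of each calendar month (a sum would be an equally plausible aggregate). The names `trailing_mean` and `resample_monthly` and the toy data are hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import fmean

def trailing_mean(values, window):
    """Average each point with up to window - 1 preceding points."""
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        smoothed.append(fmean(values[start:i + 1]))
    return smoothed

def resample_monthly(dates, values):
    """Aggregate daily values into one mean value per calendar month."""
    buckets = defaultdict(list)
    for d, v in zip(dates, values):
        buckets[(d.year, d.month)].append(v)
    return {f"{y}-{m:02d}": fmean(vs) for (y, m), vs in sorted(buckets.items())}

# Toy daily data covering January and February 2022 with a weekly pattern.
start = date(2022, 1, 1)
dates = [start + timedelta(days=i) for i in range(59)]
values = [float(i % 7) for i in range(59)]

smoothed = trailing_mean(values, window=7)   # 7-step smoothing flattens the weekly cycle
monthly = resample_monthly(dates, values)    # 59 daily points become 2 monthly points
print(smoothed[:10])
print(monthly)
```

Note how resampling shrinks 59 points to 2, which illustrates why it should be avoided when the history is already short.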

Transformations like converting to log scale should be used with particular attention because they shift the values into a different range. Users have to be aware that the forecast values will need to be shifted back, using an exponential function, to be interpreted in the original space.
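The round trip can be illustrated with a short sketch. It assumes the natural logarithm and strictly positive values; which log base Chronos applies is not stated here, so treat the base as an assumption. The function names are hypothetical.

```python
import math

def to_log(values):
    """Natural-log transform; every value must be strictly positive."""
    return [math.log(v) for v in values]

def from_log(log_values):
    """Inverse transform: exponentiate to return to the original scale."""
    return [math.exp(v) for v in log_values]

original = [42.1, 40.8, 38.6, 42.8]
logged = to_log(original)     # values now sit in a much narrower range
restored = from_log(logged)   # forecasts made in log space are mapped back the same way
print(logged)
print(restored)
```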

Graphs in Chronos will often have a normalize option. Viewing two related series at once, say an exchange rate with values around 1 and total sales with values in the 100,000s, would be impossible on the same graph without normalization, as there isn't enough room to give both the necessary resolution. Normalization shifts the values into a range where they can be viewed at the same time without losing the basic shape and pattern.
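As an illustration of the idea, the sketch below rescales two series to the range 0 to 1 using min-max scaling. This is one common normalization; the exact method used by the normalize option is not specified here, so this is an assumption. The function name and sample values are hypothetical.

```python
def min_max_normalize(values):
    """Rescale values to the range [0, 1] while preserving their relative shape."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant series has no shape to preserve; map it to a flat line.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

exchange_rate = [0.98, 1.01, 1.03, 1.00]
total_sales = [120_000.0, 135_000.0, 150_000.0, 128_000.0]

rate_norm = min_max_normalize(exchange_rate)
sales_norm = min_max_normalize(total_sales)
print(rate_norm)
print(sales_norm)
```

After scaling, both series share the same axis range, so their patterns can be compared on a single graph.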